Face Mask Detection

Summary

This project applies YOLOv4 to face mask detection and reaches a mAP of 73.62% at an IoU threshold of 0.50. A review of current real-time object detection algorithms, including SSD and the YOLO variants, identified YOLOv4 as the strongest choice for combined speed and accuracy, even when compared with Google’s EfficientDet and Facebook’s RetinaNet/MaskRCNN.

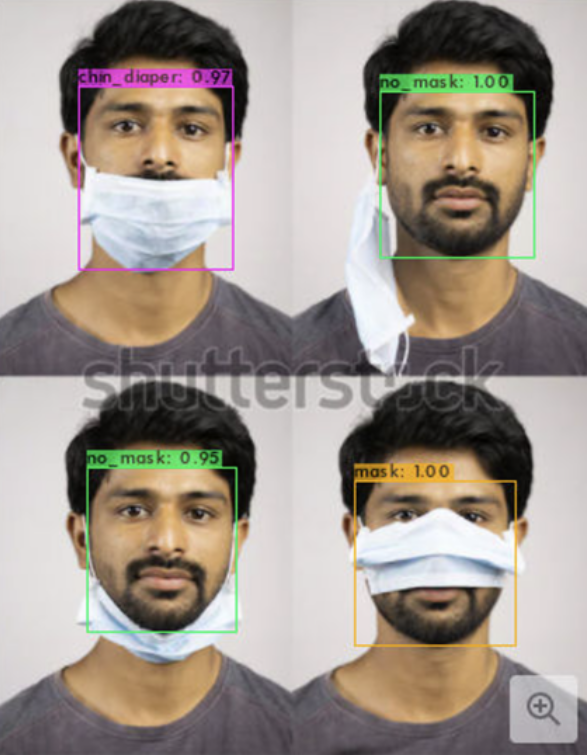

The model detects correctly worn face masks well, with an average precision (AP) of 90.08% for that class. It is also reasonably effective at identifying partially visible faces. Detection of improperly worn masks, colloquially termed ‘chin diapers,’ is weaker, a limitation attributed to the limited diversity of the training dataset. Future improvements could include a more comprehensive dataset and more advanced data augmentation techniques.
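The scores in this summary are reported at an IoU threshold of 0.50, meaning a predicted box counts as correct only when it overlaps a ground-truth box of the same class by at least that ratio. A minimal sketch of that check follows; the function names and the corner-coordinate box layout `(x_min, y_min, x_max, y_max)` are assumptions made for illustration, not code from this project.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, pred_class, gt_box, gt_class, threshold=0.5):
    """A detection matches when the class agrees and IoU meets the threshold."""
    return pred_class == gt_class and iou(pred_box, gt_box) >= threshold
```

Average precision for a class is then computed from the precision-recall curve built out of these matches, and mAP is the mean of the per-class AP values.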

High accuracy on correctly worn masks matters most, because those individuals are the ones admitted into monitored spaces. Flagging individuals who wear no mask or wear one incorrectly is the other key function, comparable to a security officer’s role at an entrance.

Facemask detection dataset taken from Kaggle

- 853 labelled images

- Three classes

Image Augmentation

- Randomly applied 2 photometric and geometric distortions per training image

- 2200+ images total
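The augmentation step can be sketched roughly as below. This is an illustration only: the four distortions in the pool, their strength factors, and the reading of "2 distortions" as two distinct operations drawn at random from one mixed pool are all assumptions, since the specific distortions used are not listed here. Images are modelled as grayscale nested lists and labels as YOLO-style `(class, x_center, y_center, width, height)` tuples with normalized coordinates, so the sketch needs no external libraries.

```python
import random


def brightness(image, boxes, factor=1.3):
    """Photometric: scale pixel intensities, clamped to 255. Boxes unchanged."""
    return [[min(255, int(p * factor)) for p in row] for row in image], boxes


def contrast(image, boxes, factor=1.5):
    """Photometric: stretch intensities around mid-gray. Boxes unchanged."""
    out = [[max(0, min(255, int(128 + (p - 128) * factor))) for p in row]
           for row in image]
    return out, boxes


def hflip(image, boxes):
    """Geometric: mirror left-right, so x_center becomes 1 - x_center."""
    flipped = [row[::-1] for row in image]
    return flipped, [(c, 1.0 - xc, yc, w, h) for c, xc, yc, w, h in boxes]


def vflip(image, boxes):
    """Geometric: mirror top-bottom, so y_center becomes 1 - y_center."""
    return image[::-1], [(c, xc, 1.0 - yc, w, h) for c, xc, yc, w, h in boxes]


# Hypothetical pool; the real project may have used different distortions.
DISTORTIONS = [brightness, contrast, hflip, vflip]


def augment(image, boxes, rng=random, k=2):
    """Apply k distinct, randomly chosen distortions to one labelled image."""
    for distort in rng.sample(DISTORTIONS, k):
        image, boxes = distort(image, boxes)
    return image, boxes
```

The geometric operations must update the bounding boxes together with the pixels, otherwise the labels no longer line up with the faces in the augmented image.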

Fine Tuning

- Batch size = 64

- Max batches = 6000

- Steps = 4800,5400

- Classes = 3 in the YOLO layers

- Filters = (classes + 5) x 3 = 24 in the convolutional layer before each YOLO layer
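The fine-tuning values above are tied together by simple arithmetic, sketched below. The helper name `yolo_finetune_settings` is hypothetical. The formulas assume the usual Darknet conventions: each YOLO layer predicts 3 anchor boxes, each box carries 4 coordinates plus 1 objectness score plus one score per class, and the learning-rate steps sit at 80% and 90% of max batches, which matches the 4800 and 5400 listed here.

```python
def yolo_finetune_settings(num_classes, max_batches, anchors_per_scale=3):
    """Derive the class-dependent config values for a YOLO fine-tuning run."""
    # Each anchor predicts 4 box coordinates + 1 objectness + num_classes scores.
    filters = (num_classes + 5) * anchors_per_scale

    # Learning-rate decay points at 80% and 90% of training (integer arithmetic).
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)

    return {
        "classes": num_classes,
        "filters": filters,
        "max_batches": max_batches,
        "steps": steps,
    }


settings = yolo_finetune_settings(num_classes=3, max_batches=6000)
print(settings["filters"])  # 24
print(settings["steps"])    # (4800, 5400)
```

With three classes, the `filters` value of the convolutional layer immediately before each YOLO layer works out to 24.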

Training on Google Colab

- Saved weights every 1000 iterations

Example Detections